Join Scott Gnau from InterSystems at READY 2025 as he unveils the power of InterSystems IRIS, a data platform designed for excellence and innovation. Discover how InterSystems IRIS robust architecture handles complex, multimodal data at scale, serving as the essential "fuel" for reliable AI. The session highlights key advancements, including a pivotal collaboration with Epic Systems and the new Health Module for Data Studio, streamlining healthcare data integration and analytics for trusted AI solutions.

Presented by:

- Scott Gnau, Head of Data Platforms, InterSystems

- Peter Lesperance, Senior Director of Performance Engineering, Epic

- Tom Woodfin, Director of Performance Engineering, Data Platforms, InterSystems

- Gokhan Uluderya, Head of Product Management, Data Platforms, InterSystems

- Jeff Fried, Director of Platform Strategy, InterSystems

- Daniel Franco, Senior Manager, Interoperability Product Management, InterSystems

Video Transcript

Below is the full transcript of the InterSystems READY2025 Keynote.

Different by Design

Scott Gnau, Head of Data Platforms, InterSystems

[00:00] In the few minutes that I have with you this morning, I wanted to spend a lot of time talking about InterSystems IRIS and how we are different by design. Design to us is really critical and a key element behind everything that we think about and implement. As Terry (Ragon) mentioned yesterday in his opening keynote, we are focused on excellence, and excellence starts with a really great design. A design can make or break any technology or product, and it’s something that we focus on very heavily.

[00:37] Some designs are just bad. Those of you from London understand that while this building is kind of neat looking, it melts cars in the street beneath it when the sun comes across it – not really a great sustainable design. We have the Robin here; again, a great design, economical, fewer parts, lighter, easier to construct, but the cornering doesn’t work really well, so it’s not particularly a good design.

[01:08] Some designs aren’t right. Having beautiful grass is really good, and having power in your building is really good, but maybe not having those two things together is better. Other designs are really elegant, but they’re not fit for purpose – like this bridge, beautiful design, except that it kind of resonated with the winds blowing down the river channel and ultimately collapsed.

Read the full transcript

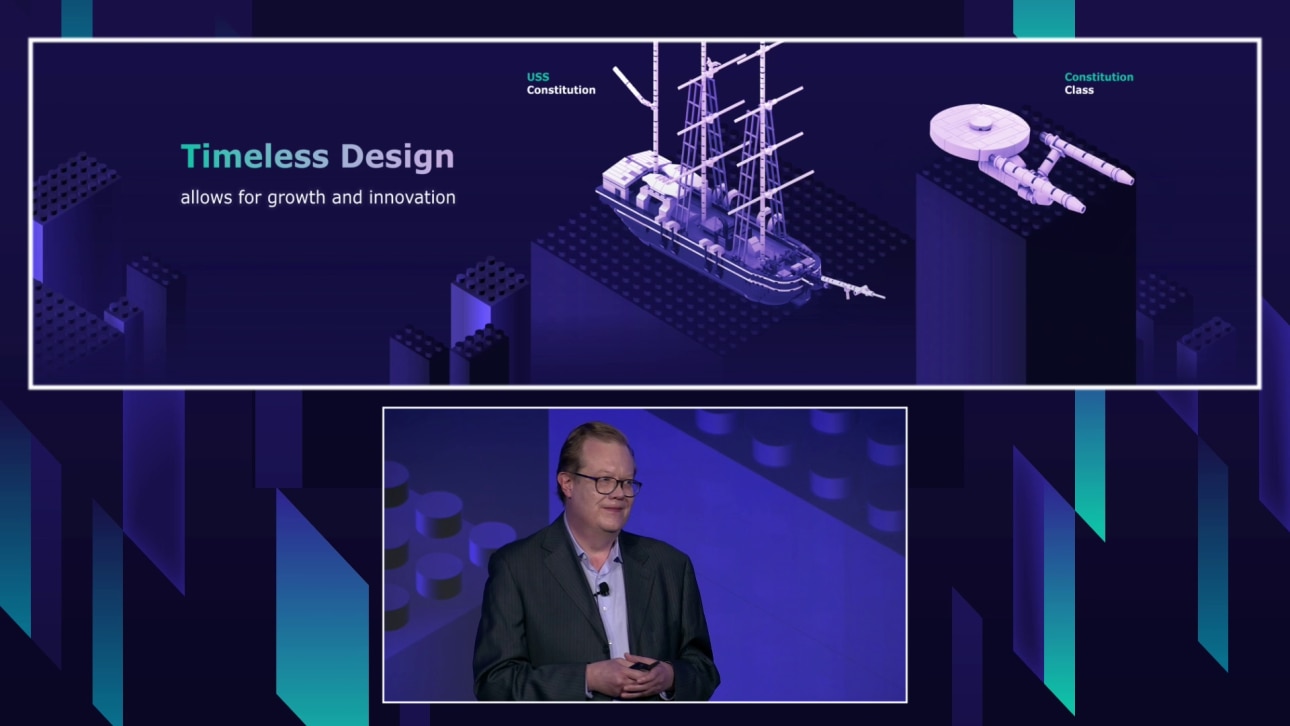

[01:42] Some designs, however, are timeless. Any parents of young children here might think this timeless design is to inflict pain on your feet when you walk through the kitchen and step on them, but in fact, some designs are truly timeless, because they enable you to build out things that you can only have imagined before. Timeless design really allows you to grow and innovate. Think about the simple design of that Lego block: it lets you go from building the Constitution to building the Constitution class.

[02:24] So, what makes a timeless design? I think there are a couple of key characteristics of timeless design. Number one: it’s got to be simple. If it’s too complicated, it's not going to be sustainable. Nobody will understand it. It will break. It will be really hard to support. It's got to be resilient and reusable in many different forms and formats. It has to be adaptable, because our imaginations change, our requirements change, and the world around us changes. This design needs to adapt, and it needs to provide for innovation along the way. These are the core elements that I think are core to any real timeless design.

[03:14] When I think about (InterSystems) IRIS and how we’ve built from the inside all the way out to everything that we deliver, we truly intend for this to be a timeless design. The key elements of this design for us are the core data engine. We weren’t built to be a SQL store, a document store, or an object store. We were really built to store any kind of data very efficiently and very effectively, and to be able to process that data using not only transactions, but analytics and AI. This is a really powerful combination, because it allows you to do many, many things. It allows you to go build out applications that you never thought were possible before.

[04:06] Of course, in data management, interoperability is extremely important, and it's even more important in today’s age with cloud-centric data stores, with internet-connected devices, and with generative AI. Being able to connect, collect, move, and process data seamlessly is a core and important part of the design. And, of course, it has to scale, because you might build your application initially, and then you’ll be really successful in your business. You’ll acquire other companies, and things will grow. You don’t want to have to rebuild those applications, and this adaptability of extreme scale becomes a key differentiation.

[04:51] We like to think about those four key elements of our design as really what we are enabling in a smart data fabric – to be able to connect or collect any kind of data, run any kind of analytics efficiently inside of the engine without moving data, without making copies of data, and with extreme performance and scale.

[05:18] So, that's all interesting, but how does that design fit into how we think we can be of assistance to you? Well, as a technology professional myself – and I think you probably have these same constraints – there are always deadlines, having to get things done. There's never enough money to do everything that you want to do, and there are budget constraints that you have to look out for. And, of course, your users and peers in the business are always going to have new ideas of things that they want to do.

[05:51] Well, this is where the different by design capabilities of (InterSystems) IRIS can really come in handy. When you have deadlines and you have to get things done quickly, this is where our multimodel, multilingual engine really shines – because you can get things done in a single place without having to understand, implement, and integrate multiple different technologies in a workflow. You can simply do it in one simple place.

[06:18] From a budget constraints perspective, we also have a great advantage. The first one is that we allow you to move your processing to your data, and not have to move your data to the processing. That’s a really powerful construct, and I want to explain a little bit more why I think that’s a big deal.

[06:37] Moving data is expensive. It takes time. It removes performance from your application, and it's a hassle. It's expensive because, whether it's on-prem or in the cloud, you've got all this network capacity that you’ve got to provide for. And oh, by the way, when you're moving data around, it requires intervention – because sometimes network connections go down, and you've got to go figure out where your data have gone.

[07:08] It takes time. Of course, you're limited by the speed of light, but it adds latency to the decisions that you make. If you're moving data from one place to another, it's very hard to actually take action in the moment because you're waiting for the data to arrive at its destination, to be processed, and then take action. So, it adds latency.

[07:23] It's a hassle because when things break, when they need to be maintained, you've got to run around and synchronize and understand where your data are. And, it makes it harder to manage the security and privacy of your data, because once it's gone, you don't know all the time what happened to it, what was done with it, where did it go after that.

[07:49] Conversely, being able to move your processing to your data, you save all of that latency. It's highly secure, highly private, highly efficient, and even if the software itself wasn't performant, it would be faster because you don't have the latency of waiting to move the data. And, of course, our software is highly performant as I mentioned before.

[08:03] The efficiency of the core data engine is also a really big deal. You can use fewer resources, which means less CPU cycles, less power, less heating, less cooling – to get the same job done.

[08:23] Because of the integrated platform, your long-term costs are also going to be optimized because it's easier to maintain. It's a single place. You'll meet John Paladino tomorrow morning – you have a single phone number to call if something breaks. It's very easy and cost-effective to maintain over the many years of deployment. And ultimately, you have fewer copies of data to manage, which is a real ROI because storage is not free.

[08:53] And then finally, on new requirements – and of course, in this conference, we talk a lot about AI and all of the AI capabilities that we've added to (InterSystems) IRIS – and that is just one example of innovations that we deliver that allow you to meet new requirements in applications that you've built.

But one of the biggest opportunities we have here is that with (InterSystems) IRIS and its connectivity and interoperability engine, we allow you to see through the silos of data in your organization, to get those new answers, to find those whitespace applications – to drive better revenue and better cost optimization inside your business. And, of course, you can do that now with the extension of AI capabilities that we've built into the products.

[09:37] Our design is different. It gives you a lot of capabilities and advantages. Hopefully, many of you have taken advantage. And, we’re building on top of that core foundation and that core design to continue to innovate and provide value.

In 2025, we’ve added additional data format support and capabilities around vector, which is, of course, a key implementation area for generative AI and RAG models and RAG implementations of your applications. You’ll see some demos later of how we’re able to do that. We continue to focus on that core efficiency and performance improvements so you can either do more with less, or you can do more with the same. And, year over year, release to release, we have seen an average of about 20-25% throughput performance improvement and CPU utilization improvement in our (InterSystems) IRIS releases. And, we’ll continue to look for areas where we can optimize and make that engine even more sophisticated and more efficient, so you get additional payback from the investment that you’ve made.

[10:40] And, finally, we're trying to make it easier to use. The first “I” in (InterSystems) IRIS stands for intuitive, and we’re releasing – and have released – a number of Data Fabric Studio offers, which are a low-code, no-code way to access all of the power inside of (InterSystems) IRIS’s different by design engine.

[11:09] So, where I started this was by talking about design, and that some designs are timeless. And, you can go from that simple block, which might have been the capabilities that you originally acquired from us many years or even decades ago, and with what we've delivered I think you can now build, confidently, an F1 race car. We'll continue investing so that you can go beyond that and actually build your own spaceship.

That's our commitment to the investment in the technology. And, because we have that core foundation with a timeless design, we'll be able to deliver these things to market ahead of our competitors, which will give you an advantage in the applications that you're building for your constituents.

[12:01] So, (InterSystems) IRIS is different by design. We have a full lineup this morning. I'm going to welcome to the stage Pete from Epic and Tom from InterSystems. Later on, you'll hear from our Head of Product, Gokhan Uluderya.

Pete Lesperance, Senior Director of Performance, Epic

Tom Woodfin, Head of Development, Data Platforms, InterSystems

[12:20]

Scott: Welcome.

Pete: Thanks for having us.

Scott: Thank you for being here. I'm going to start with you and ask: what should we know about Epic?

[12:35]

Pete: Sure, so the important thing to know is that we develop an integrated suite of healthcare software that's used by about 650 customers worldwide currently. That includes some names you may have heard of – like Kaiser Permanente, and Mayo Clinic, and Mass General, and then AdventHealth here in Orlando. But even if you haven't heard of Epic, you might have heard of our patient portal. How many of you have ever logged into MyChart?

Okay, a good number of you. So, you have actually accessed your data on an (InterSystems) IRIS database. And, really the core strength of our software is that it's a single integrated system with all of our apps built around a single patient record. And, really what that means for healthcare organizations is that all of their users – whether they're clinical, or operational, or financial – are all working on the same system. And that no matter where a patient is seen, or who is working with them, they're all working with that same core patient record.

[13:31] I think we really heard some examples in yesterday's keynote of why that's so important. And, really with your accessing your own record on MyChart, you are actually looking at the exact same bits and bytes of data that your healthcare provider would see if they opened your record up in Epic.

[13:57]

Scott: Great. So, you work with some of the largest healthcare organizations. How does (InterSystems) IRIS help you support those big customers?

[14:03]

Pete: Yeah. So, I think you kind of talked through a bunch of those things in your opening here. But, the short story is that (InterSystems) IRIS really handles complex, multi-dimensional data really well – and at scale and efficiently. And, then also it guarantees consistency of that data all along the way. And, I think if you think about your encounters with a healthcare system, you can see why that’s important.

So, for example, think about your last clinic visit – what was entailed in just that simple visit you might have done to the clinic? You called or logged into MyChart and scheduled time with your provider. You maybe filled out some pre-visit questionnaires. You maybe signed some electronic consents. And, then you arrived and got checked in at the front desk or at a kiosk. The nurse took you to the exam room. The nurse took your vitals, and reviewed your medications with you. Then, the physician came in, examined you, and documented their findings, wrote a note, placed orders. And then, those orders had to be routed to another person, another provider, another workflow – whether it's a lab tech, or a radiologist, or a pharmacist. So, really just many types of data that are built around that single patient record. And then, every step along the way, you and your care providers are depending upon that data being accurate and up-to-date.

[15:38] So, a good example is if you’re hospitalized, it becomes even more critical – where a physician is placing medication orders for you that are critical and sensitive. The pharmacy is filling them in the hospital pharmacy, and the nurse is administering them. And, all along the way, your life literally is depending on that data being 100% accurate and up-to-date, even if multiple people are working with that medication at the same time.

[16:12] Now, let's take all that, and imagine that complexity, but then scale it up. Imagine dozens of hospitals, and hundreds of clinics, and tens of thousands of concurrent users, and millions of patients that are all on one system. And, that’s really the scale of the largest Epic customers today. The way that we've been able to make that work long-term is that as our customers have grown their business and we've increased the capabilities of our software – you have really been there alongside us, growing the capabilities of the data platform to stay ahead of that curve.

[16:51] A really good example of this is from when I first joined the performance engineering team back in 2019. At that time, we were in discussion with a few very large organizations. And, when we did our modeling and projections for these organizations, we saw that they were going to be 100 million global references per second in size at their full roll out. And, that was a little bit of a problem because at that time, the largest system we could support was around 30 million.

We really felt strongly that those organizations, for the benefit of their patients, for the benefit of their users, it was going to be best if they could stay on a single instance of the database. So, we engaged with you and worked very closely, and collaborated on all of those bottlenecks in the architecture, breaking them down, and iteratively working through them. And, within months, we were actually running lab tests beyond that 100 million mark.

And, today we have about four customers who are successfully running above 100 million global references per second, and they all have excellent performance for their end users.

[18:00]

Tom: And, that kind of incredible progress is really a virtuous cycle that benefits both Epic and the entire (InterSystems) IRIS ecosystem. So, Epic’s real-world scaling – and I think it’s fair to say, with the Epic cloud processing trillions of Grefs every day – it's become hyperscaling. That scaling continuously pushes (InterSystems) IRIS to its limits, and what we learn together allows us to lift those limits higher and higher, release after release, year after year. And, those benefits add up for all (InterSystems) IRIS users across our ecosystem. A financial services customer can process the day’s trades more efficiently. That saves them on cloud costs. And, a retail customer can use the additional scalability headroom to build a smarter ERP system with vector search and AI.

And, as Peter described, Epic can add new innovations to their software and onboard larger and larger customers onto a single real-time, consistent platform. And, that demands more scale from (InterSystems) IRIS, and the cycle continues.

[18:55]

Scott: So, Tom, maybe you can talk about how we partner and collaborate with Epic.

[19:01]

Tom: Sure, the foundation of our work together is developer-to-developer interaction. InterSystems and Epic were both founded by developers. It’s in our DNA. We want to get our hands dirty on the CPUs and in the code.

Now, Epic’s team has built out at their headquarters in Verona, Wisconsin, a really state-of-the-art testing lab, and alongside it a highly accurate simulation driver for their workload. So, once every few months, we virtually ship over the latest in-development (InterSystems) IRIS, plug it in, and start turning up the dial on load. Nowadays, that means bringing online thousands of cores across massive ECP clusters. I have heard rumors about brownouts in the greater Madison area when we try this.

What we’re looking for is linear scalability. The more cores we throw at Epic’s workload, the more throughput we get, the more users we can serve. And if at some extreme load, we see that curve start to flatten out, we dive in with our kind of profiling tools and look for bottlenecks. And, you can really see the gains of this approach in the performance and scalability of recent (InterSystems) IRIS releases.

[19:54] So together, Epic and InterSystems, we’re climbing the curve, but I think Peter and I both understand we really can’t afford to be complacent. The vertical gap between what we achieve in the lab and Epic’s largest customers appears pretty significant today. But, if you look at the horizontal gap in time, we’re really only a couple of years ahead of the impressive growth of Epic’s user base and the capabilities they depend on.

[20:12]

Pete: Yeah, I think Tom did a good job covering our joint high-scale testing efforts that we do about once a quarter. But, there are a lot of other touch points that we’ve had, some of which we've had for over 20 years. So, one example of this is that every two weeks, the performance engineering team and the kernel team meet, and we use that as an opportunity to exchange information – so, talking through test results, experimental builds that are available, and test schedules for that. And, we really exchange ideas there on what should we be doing next in order to keep ahead of that curve.

We also have a quarterly project review meeting where leadership gets involved, and we talk about what the priorities are, and make sure that we’re all on the same page. And, then we also have our biannual, or every six month performance and availability meetings.

[21:10] So, in the spring, you came to the Epic campus in beautiful Verona, Wisconsin, and then we come to Boston in the fall. What I really like about that conference is it’s a chance to meet face-to-face, be in the same room. And, it also brings together people in all different roles across our two companies. It’s not just developers who are there; it’s also people in quality, people in support, and then of course people from leadership. And then, we collaborate on what we’re going to work on together over the coming year. That cadence of communication has been what’s allowed us to sustain this progress over the years.

Tom talked a lot about the scalability improvements that we’ve been working on together, but there’s also been incredible improvements in compute efficiency. We’ve been collaborating on that as well. What we’ve been doing is we will profile our code running on top of (InterSystems) IRIS. We send that information to the kernel team. The kernel team looks at it and tries to find opportunities for optimization, sends us an experimental build, we test it, we give them feedback, and we keep doing this iterative cycle, trying to squeeze out efficiency gains in our code running on top of (InterSystems) IRIS.

[22:22] There’s a number up here. This is actually just from the move from (InterSystems) IRIS 23 to (InterSystems) IRIS 24. But, since our customers have moved from Cache A to the latest version of (InterSystems) IRIS, they’ve actually seen a cumulative improvement somewhere around 100% in terms of both CPU utilization and response times. That’s my plug to all of you: if you’re still running on Cache, please look to move to (InterSystems) IRIS and the latest versions of (InterSystems) IRIS, because you will see those compute utilization benefits.

[23:02] The other thing I’ll say is that the exchange between us goes both ways. For example, when we built the simulator that Tom just talked about – that was over 20 years ago – we got a lot of input from InterSystems when we planned that out. We also take your feedback on coding style, and we work that into our libraries and our developer education. And, another important thing is that over the years, you’ve really helped us with how we design the database architecture to be resilient – like high availability strategies, disaster recovery architectures – and that’s been really critical because downtime costs both money and even lives in healthcare.

[24:00]

Scott: So, Tom, where do you see the collaboration going from here?

[24:05]

Tom: Well, obviously, raw scalability is a huge part of the story, but as Peter discussed, it’s really much broader than that. Epic is on the leading edge of trends that are happening across our industry, and we want to do everything we can as a technology partner to help them keep forging ahead. So, for example, last year we had our first joint Epic-InterSystems hackathon focused on Kubernetes and containerization. And, beyond embracing the cloud deployment model, we’re collaborating on the building blocks of cloud architecture for elasticity, agility, and manageability.

And, similarly, I think we both see the promise of AI as the next generation of compute, enabling capabilities that go way beyond chatbots. We believe it’s going to be pervasive, and it's going to be delivering features of value to users in just about every way they interact with powerful applications like Epic. So, we’re working closely with Epic R&D to understand and complement their architectural strategies for AI. I think that's potentially a huge story that’s just getting started.

[24:53]

Pete: Yeah, a couple of other things that come to mind for me. We’ve been working with Tom closely on ECP manageability. We have a lot of customers deploying ECP. We’re trying to make that an easier experience for them. In fact, Tom and I spent dinner one night at the PNA meeting last fall talking about that. Security and encryption obviously are always on everyone’s mind. I think there’s some work we need to do there, especially in response to some regulatory requirements that are coming up.

And, then there’s just some cool stuff out there on the technology front. There's accelerators both on and off chip like DPUs and NPUs and all those sorts of things that we should be collaborating to see how we can better utilize. And, then there’s stuff that’s way out there like CXL 3.0, and decomposed systems, and shared memory across hosts and all sorts of fun things that we’ll be talking about for years to come.

[25:53]

Scott: So, there’s going to be a lot to do.

[25:59]

Pete: Yes, for sure.

Scott: So, thanks for the shout-out about upgrading to (InterSystems) IRIS. I appreciate that. Epic customers stay up to date on (InterSystems) IRIS versions – can you tell us a little bit about how you do that?

[26:04]

Pete: Sure. So, the latest version of (InterSystems) IRIS that we’ve made available to our customers is (InterSystems) IRIS 2024. And, I think you can see the number here – we’re at 84%. This is actually a stale number. I got that about a week ago. I reran it yesterday – it’s 86% of Epic customers are live on (InterSystems IRIS) 2024.

The way that we make this happen is that we’re very specific with our platform guidance for our customers. So, we have something that we call the target platform. And, in that target platform, we lay out what we want our customers to be on in terms of the hardware and software to support all of the Epic components, but especially the (InterSystems) IRIS database. And, in that target platform, we give a range of compatibility with Epic versions, so every Epic version is compatible with the most recent two (InterSystems) IRIS releases.

And, then we’re pretty aggressive with our customers about upgrading their Epic version. But the result is our customers are upgrading – almost all of them – at least twice a year. And so, the (InterSystems) IRIS version kind of comes along with that. That’s not really the full story, though. I think with (InterSystems) IRIS 24, those compute improvements that we saw a few slides ago are a big part of the story as well.

I’ve actually talked to customers where they were staring down the need to upgrade their hardware, and they were actually able to actually put that off just by upgrading their (InterSystems) IRIS version instead.

[27:55] A couple of other things – obviously, the scalability benefits we’ve been talking about are important to our largest customers, and they get that benefit from upgrading (InterSystems) IRIS. But, then there are also new tools that come with (InterSystems) IRIS versions that help with things like database management. I'm thinking of DataMove, which is a tool some of our customers have started to deploy recently.

[28:19]

Tom: And, this hasn’t been a one-way street either. We’ve been working on the InterSystems side to become more predictable in our release calendar and to more quickly support new underlying OS releases like Ubuntu, Red Hat, so that Epic and their customers can cycle more rapidly onto the latest, most secure versions.

So, we keep coming back to this collaboration as a synergy that helps both teams build better software for our developers and for our users. It really is. And, it’s a model we at InterSystems we see over and over again with our most effective customer partnerships. So, if you’ve got Epic-scale ideas for your technology, please come find me. I’ll be in the Tech Exchange. I’m certain we can make them real together. I think our collaboration with Epic is the best possible proof point of that.

[29:01]

Scott: Great. Well, thank you both. Next to the stage is Gokhan Uluderya.

AI Era Runs on Data

Gokhan Uluderya, Head of Product, Data Platforms, InterSystems

[29:21] Good morning, everyone. I’m super excited to be here, but also to have this great opportunity to talk to you all about the great innovation that we’ve been delivering in data platforms. I said data and innovation in the same sentence. I’m sure a lot of you are thinking we’re going to talk a lot about AI – and I promise, you will not be disappointed.

[29:53] There’s no doubt we’re going through a new era right now. We are in the midst of the new AI age, and everybody is trying to take advantage of AI-driven innovation. We’re all searching for trusted partners and technologies that will help us go through this enormous transformation. And, at InterSystems, we’ve been hard at work continuing to deliver the trusted data and AI platform you all love and use – and we thank you for that.

[30:24] There’s definitely a lot of hype around AI. The hype is very real, but so is the value and the opportunity that is real as well. And, the landscape is changing so fast with agentic AI in play that expectations are ever-growing. But, now is the time to show value and results. We have been talking about this for a while; now we need to show the return on the investments we’ve all been making.

Let’s do a quick show of hands: how many of us are actively working on an AI project or an AI-related outcome? I know I am. Well, quite a few, and I think we can all agree that there’s a lot of pressure across our organizations at all levels – from CEOs to developers – to basically show AI-driven results.

[31:21] AI and generative AI are very promising,very powerful, and can be quite transformative – used for personalization, automation, and decision-making. But the reality is many AI initiatives fall short of expectations and fail to yield the return on investment that we're expecting. Creating an impressive demo might be easy, but building a real-world solution that actually works could also be very challenging.

According to a recent Gartner survey – and you can see the numbers on the screen – more than 30% of generative AI projects are expected to not go beyond the POC (proof-of-concept) stage. And, half of AI initiatives are expected to take longer than eight months to get to production – if they ever make it. This is pretty surprising.

This almost feels like that $20 million Formula 1 race car that we've been speaking about not go beyond the first pit stop. It could be very disappointing and very frustrating for a lot of people. So, there are some key challenges and issues that we need to overcome. Building a real-life AI solution requires AI-ready data, because even the most intelligent system will yield poor results with low-quality input.

[33:03] Decisions made by AI are probabilistic by definition. They will be inaccurate at times. LLMs will hallucinate. They do hallucinate today. They will continue to hallucinate tomorrow. This can be a significant challenge in real business settings, real business environments, where our systems must yield accurate, trusted results with responsible, ethical governance of data and AI. Achieving this outcome might require integration of many complex systems together. And, it can also get very expensive, because now, we're using external services, AI services, and LLM services in our architectures.

[33:54] So, we must understand one very important point. There is really no good AI strategy without a great data strategy. Data is the fuel to the AI engine – and with low-quality, low-octane fuel, your engine will sputter or even worse, completely fail. Well, the good news is this is actually where our products really shine. Our differentiated platform can be a key enabler for you. Our unique multi-model data platform architecture is back in the spotlight because the generative transformers, the AI agents, the LLMs, can process all modalities of data: structured, unstructured, and semi-structured. All of it.

Ten years ago, we used to do AI mainly on relational data. At least that was the mainstream. Advanced solutions using unstructured data as well, but LLMs changed that game plan. Now, if you think about a doctor making a decision about a patient, they need to look at the relational data in Epic, and they need to look at the X-ray results or the MRI results that come in image form, the lab results in document form, and even their own handwritten notes on a piece of paper.

[35:21] For a retailer to make a product recommendation – if you go to Amazon.com, you get product recommendations – how do they do that? They need to build a unified 360-degree view of the customer, and also track the activities of their customers across all channels: online, in the store (brick and mortar), and also social sites – activities on Instagram, and TikTok, and Facebook. All of that data needs to come together for them to make the right decision about us or make the right product recommendation.

So, our real-time data platform acting as your data fabric can really help you build that AI-ready data corpus. With our industry-leading integration, interoperability capabilities, and also AI and analytics functionality, you can reduce cost and complexity significantly. And, last but not least, our data platform acting as your common control and governance plane can help you build the trusted solutions that you and your customers desire.

[36:34] Well, basically for us to succeed in the long term, we really need to cut through the hype and see what’s beyond it. And, our platform acting as that bridge that will take you from heightened expectations to real productivity, you can really avoid the disappointment of the disillusionment phase that a lot of people in the industry are going through nowadays.

And, we have been continuing to innovate to maintain our difference and leadership. Since the last time we spoke at Global Summit last year, we continue to invest in our core data platform, build more analytics and AI capabilities, and also give you more new, modern experiences that actually make consuming data and AI technologies a lot easier. These enable you to build the right strategy and the right solutions in a cost-effective, reliable, trusted way. And now, I think I'll dive into each layer and talk about what’s new in each area.

[37:50] So, when you look at the core data management layer, AI and analytics capabilities empower you to harness the power of your entire data ecosystem and enable you to build trusted LLM integrations, AI agent integrations, reducing hallucinations and increasing accuracy.

You can now manage your data more effectively and cheaper with simplified database operations. Capabilities like table partitioning enable you to build advanced data storage techniques. Storing your warm and important data in premium storage and unused cold data in cheap storage can help you reduce costs significantly, but also at the same time, can make your systems a lot more performant.

And, you now have more cloud-native data services at your fingertips, with security, privacy, governance, trust embedded and throughout the entire platform.

[39:08] Our platform gives you all the capabilities you need to build agentic solutions out of the box – all you need for RAG in the box available in one platform to build trusted LLM-based, agent-based solutions with less hallucinations and better accuracy and results. We have not only the tools that enable you to build AI solutions, but also AI agents that help you carry out complex tasks such as building complex queries and transformations, and even visualizations.

[39:48] And, for the end user, AI assistants help them analyze large amounts of data – the news, research data, SEC filings for financial decision-making – and drive insights and actions out of them.

[40:08] Well, efficiency and getting things done faster have never been more important in the data and AI space, and I think we all feel it. We continue to strive for intuitive, easy-to-use experiences and also meeting our users where they are and where they want to be.

Professional developers can use their most favorite IDEs, such as VS Code, with the programming language they prefer – Python, in addition to .NET and Java.

AI developers and data scientists can use native notebook experiences within their IDE VS Code to build and manage AI models.

For all builders, analysts, and integrators, our low-code, no-code, AI-assisted experiences in Data Fabric Studio, with domain-specific capabilities, empower you to get to your results a lot faster. And, agentic experiences embedded throughout will help you harness the power of data and AI and make everybody even more productive.

Now, I would like to invite Jeff Fried, Director of Marketing and Platform Strategy, to tell us more about agentic strategies and how some of our customers are using these technologies to build some amazing real-life solutions. So, please welcome Jeff Fried.

Speeding Your Journey to Trusted AI

Jeff Fried, Director of Product Marketing and Platform Strategy, InterSystems

[41:45]

Jeff: Good morning. As Gokhan has just taken you through, InterSystems is doing some amazing work on a lot of fronts, especially in AI. This ranges from features for developers and developer environments to subsystems for solution developers to fully built applications. And, you’ll see a number of them right on the stage this morning, and many more as you go through sessions and to the Tech Exchange.

I’m going to focus on one particular one: the integrated vector search. How many people have been using this already?

It’s pretty exciting to see the uptake. We introduced this a little over a year ago in March, and we’ve had just really fast uptake through the customer base. Just as a reminder, if you’re building a Gen AI system, you can build a demo very quickly, but getting it to really perform in a mission-critical application is much tougher. And, one of the big problems is that the data that goes into large language models can be irrelevant, can be stale, and is certainly not under your control.

[43:22] I’ve built a little system that lets you look at LLMs side by side and different techniques. And, this is just directly to an LLM. If you ask anything, what their cutoff date is, you can see that this is stale data. This is almost 18 months old. And, all models have this challenge. It's expensive to build. So, you're dealing with out-of-date data in the model and when you ask a question: “When did Pope Francis die?” According to the model and AI system, he’s alive and well. So, out-of-date data gets you wrong answers.

[44:06] There are other ways you can get wrong answers as well. I was wondering in Orlando about the problem you might have heard of – with killer rabbits. And ChatGPT tells me all about it. But frankly, I just totally made this up. This is fiction, and it’s a hallucination. So, using a large language model has that problem. You can get wrong answers, even wildly made-up answers.

And, if I compare side by side the technique of RAG and I look for what is the freshness of the data, the response you get if you’re using RAG is as fresh as your data. So, the data that I put into the system is a set of papers about RAG, just to be a little bit meta about it. And it’s as fresh as a month ago. If I look at some of the papers, I get detailed results if I’m using a RAG system, and I get vague results, and you can go look up this yourself, using an LLM by itself. So you get the idea that LLMs by themselves present problems because of data issues. And, your AI is only as good as your data.

[45:36] Now, the technique of Retrieval-Augmented Generation is what it sounds like. You’re essentially retrieving data under your control and sending that to the large language model, saying: “Answer this question, but only use this data.” This is a super fast-evolving field. Having loaded a lot of these papers into a demo system, I can tell you the first published paper about Retrieval-Augmented Generation was May of 2021, so about four years ago. In 2022, there were 10 papers. In 2023, 93 papers. In 2024, 1,207 papers. And we’re now at about 120–130 per month.

This is a field that is moving very fast, evolving quite quickly, and InterSystems is staying with the state of the art. Our focus strategically is making Gen AI easy for you to add to your applications. And let me show you a little bit of that.

[46:55] The current top state-of-the-art technique for using RAG is called Graph RAG. It’s using graph structures and knowledge graphs to inform the retrieval process. Just to give you a sense of the progression release-on-release, our development team is adding in new features. Since we first added the vector search capability, we’ve added in adapters to the most common orchestrators so that you can quickly use this in combination with multiple models and multiple systems. You’ll see that in the Tech Exchange as well as in the vector search session today.

We’ve built in embedding generation so that it’s very easy for you to just create an embedding – a vector representation – of the data when you first create the data, and to update it when you update things. So you don’t have to worry about data synchronization.

We’ve built in hybrid search. You can use all the tools you’re familiar with in SQL and combine that with the semantic matching that you get from the use of vectors. And, we’ve added approximate nearest neighbor index. So, that’s a way to get fast matching across very, very large amounts of data.

[48:22] So, release after release, we’re staying current. And I’ll show you a Graph RAG system. This is a knowledge graph of these papers about RAG. It looks very sort of science-fictiony, so you might not build this into your application particularly, but there are concepts like this is large language models as a concept (it’s quite big). This is knowledge graphs. They are connected to papers about them, and to authors of those papers, and to the organizations those authors belong to, etc.

So, that structure allows us to answer questions more precisely. For example, I can ask about combinations of topics – what about LLMs and knowledge graphs? And, I get very specific papers about them, and I can explore the knowledge graph just in the neighborhood of the question I had.

I can also ask questions based upon the structure of that graph – how many links there are, how far away two things are in a knowledge graph. So, for example, who is the author that’s most common? And, I see three authors who have the most links to papers, and I can see how often they’ve worked together.

[49:50] If I compare that side by side with a classic RAG system, and I ask the same question – who’s the most common author? – a RAG system just won’t know, because it doesn’t have that structure to work with. So you can see at the bottom left, the RAG system is saying, “I don’t know.”

So, graph RAG is one of a set of techniques, but the most state-of-the-art one, (InterSystems) IRIS, is a great way to build a Graph RAG system because the global structures allow you to build – I call them designer data structures like graphs. And, the built-in vector capability lets you do this RAG structure very, very effectively and efficiently.

So that gives you a sense that we’re really focused on this topic and on making RAG easy for you to add to your applications. I’m going to show you a couple of customers that have done that, that are in production. And, I’m picking on people that are here, so that hopefully you can meet them and learn from them.

[50:58] Holger, Ditmar, and Fabio from Agimero in Germany are here. Come see them after this session and ask them.

They won an Impact Award for a solution called Guided Agentic Procurement – really trying to shorten the procurement cycle in a supply chain from weeks to hours. Their toolkit includes the InterSystems Supply Chain Orchestrator, InterSystems IRIS, and vector search. They were able to add vector search into their procurement system, which looks like an e-commerce kind of system. You can get a sense of why semantic search would help in this application – to save approximately €2 million per year.

[52:05] Now, one of the things that really impressed me about this was that Fabio told me it took him 36 hours to build this – including creating all the embeddings and going through multiple cycles. It was really easy to add to their existing application, and they were in production in two weeks. So, very quick time to value – in this case, simply adding vector search to get better semantic matching for your application and then layering LLMs, agents, etc., on top of that.

[52:47] Let me highlight another one. This is a company called IPA, whose subsidiary BioStrand has really gotten deep into biological engineering and complex data analysis. Dirk is also here.

So, BioStrand IPA won an Impact Award last year for this application called Lens AI, whose focus is speeding drug discovery, and they’ve been able to speed up drug discovery, for example, antibodies and vaccines by a factor of a hundred or more. They use vector search and RAG in a very creative way. It’s not just the text. It’s not just the images or the videos. It’s the protein structures, it’s the genome structures, which then can be encoded. So, the multimodal capabilities of InterSystems IRIS come to play just like the multimodal capabilities of most modern LLMs. Their application looks like this, and I do think this is the first RAG system that works on biological sequences, and we’ll see more things like this.

So, the multimodal integration allows you to search for sequences to match between, let’s say, protein sequences and literature about them, to look for associated pathways, and to build agents that can then collaborate with each other in this drug discovery process. It’s pretty amazing stuff.

Dirk told me that they have scaled the system up. Obviously, there’s a ton of data when it comes to biologic compounds and genomics. And, there are currently over 440 million vectors. So, a great use of our approximate nearest neighbor index.

[55:21] Okay, so hopefully, I’ve given you a sense of why it’s important to use RAG. It’s the primary way that applications interact with large language models today – and the facilities that InterSystems has built to make it easy for you, as builders, to put this in your applications.

I’d also really encourage you to experiment and to build skills. And, we’re doing this at InterSystems as well. We’re upskilling our developers through training, through events, through hackathons.

We’re building internal projects. So, for each product capability you see, there’s more than one project that’s teaching us hands-on how to use this technology and helping us build best practices to help you be effective in building Gen AI systems and bringing them to market. And, many of those have become customer-facing. You’ll see this on our Developer Community, as well as Documentation, our Learning Site, and soon, Support as well.

And, we’re doing exploratory projects such as Graph RAG. So, I won’t go through all of these, but it’ll give you a sense that there is an education and culture change along with the adoption of the technology. And my call to action for you in the audience is to learn and try.

We have a pathway on Learning Services for learning how to use vector search and build RAG systems, learning how to get your data to be AI-ready. There’s a Developer Community getting-started project that you can use hands-on with vector search. There are best practices, and lots and lots of projects.

So, after this conference, go home and add a chatbot to your application, and talk to us about anything that will help you get smart about this and effective, so that AI can be part of your application tomorrow.

[57:34] Thanks very much. I’m going to turn it back now to Gokhan.

[57:44]

Gokhan: Thank you, Jeff, so much – great examples of what our customers are able to do with our technology. It’s always so exciting and inspiring, and thank you all for doing all these great things and really kind of imagining all the things that we are not able to imagine.

[58:03] And, we are trying to make this solutioning and development process even easier and more effective for you all the time. So, if you remember last summer, we announced our Data Fabric Studio as a fully managed cloud offer that enables our customers to build complex data and analytics solutions using low code, no code, and AI-assisted experiences.

And, in the last 12 months, we have continued to build up that offer by providing domain-specific capabilities and modules. Modules such as Supply Chain and Asset Management help our customers by providing out-of-the-box data connections, data models, transformations, pipelines, and reports – all out of the box – help our customers become even more productive to hellp solve domain-specific problems.

[59:05] The Data Fabric, in general, is an architecture pattern, and what our customers do with our solutions is build that architecture pattern in an easy-to-use, simple, integrated way. And, it can be a game-changer for a lot of people, because this enables building the AI models, AI solutions at scale. And, it can also be a game-changer in terms of building the trusted AI solutions that we all want and need.

[59:37] So, in the last 12 months, as I said, when we built up the Data Fabric Studio offer, we realized that our customers also needed domain-specific, industry-specific solutions. So, we started building these modules that are accelerators to really building up domain-specific capabilities with out-of-the-box connectors, data models, and data pipelines.

And, today, I’m very excited and happy to announce a new offer – the Health Module for Data Fabric Studio – and I’d like to invite Daniel Franco to the stage to tell you more about it. Thank you.

Data Fabric Studio with Health Model: Empowering Healthcare Through Simplified Data Solutions

Daniel Franco, Senior Manager, Product Management, InterSystems

[1:00:32]

Daniel: Thank you, Gokhan. I’m very excited to be here today to present a new offer.

In healthcare, InterSystems has been helping our customers to build out their data fabrics since long before the term even became fashionable. Our best example is HealthShare for building patient-centric data fabric with a comprehensive data model.

[1:00:57] Moreover, both our customers and InterSystems are committed to driving better outcomes – not only in the clinical and operational data but also in the enterprise and business level. To achieve that, customers must go beyond EHR or longitudinal health record. Workforce, consumer patterns, ERP, cost, logistics, facilities, call center, and other types of data must be available and integrated for further analysis.

For example, to understand the impact of staff and image machine availability on patient waiting times in the emergency department, we need at least three source of data: HR, staffing, and device maintenance. With this use case in mind, and many others like supporting freedom of information and regulatory reporting, we are developing the Health Module for the InterSystems Data Fabric Studio. This module works alongside the rest of our healthcare portfolio – supercharging what we already have, and adding more types of data, and putting provisioning in the hands of the business.

[1:02:18] Today, we are announcing a private early access program for the Health Module. You are able to request access to it through our InterSystems EAP page.

But what is this new offer? InterSystems Data Fabric Studio with Health Module is a data provisioning tool that offers a new approach to connect, control, persist, and analyze healthcare enterprise data – delivering it to the right person at the right time in a secure environment. This is a fully managed cloud offering, provided self-service via low-code, no-code user experience.

With the Health Module, you get business and system connectors, healthcare-related data models and data sets that you can use or expand for your needs, support for FHIR, and much more.

[1:03:15] Remember the use case we mentioned about the impacts of staffing and image machine availability on the emergency department waiting times? You know the data exists, where it is, and you have a target data model to fit the data into. You are anxious about accessing the data, as people’s lives might be at risk. At the same time, you have budget constraints, so guessing the actions you need to take is not an option.

That means you need trusted, curated data right away to make effective decisions. Instead, it takes weeks for IT to gather the data and integrate it. And, even after the data is gathered, it takes a lot of effort to keep the data up to date. And, it also requires strong data governance in place to control access.

With this use case in mind, let’s see a video on how our new offer can help you to access and provision curated data to analytics tools, leading to faster decisions.

[1:04:21] First, data is created through a pipeline such as this. This is an overview of an active system pipeline management screen. To create a trusted data pipeline, first, you need to connect your data. Let’s see how it works. In this use case, one of the main data sources is the EHR data. Using our pre-built data connectors, we can bring in emergency image orders and encounter data from (InterSystems) IntelliCare EHR just by configuring some parameters. You can also test the connection to it right away.

[1:04:56] Now that we have securely connected (InterSystems) Intellicare to InterSystems Data Fabric Studio, we can import the data schema we need – for example, emergency imaging orders. At this stage, we add only the table schema to the data catalog, where engineers and analysts can browse what is available to them. Both schemas and data models are visible through the data catalog, where you can view attributes and enrich your descriptions. The same workflow is applied to other sources, like workforce or image device repairs.

Now that you have access and understand our schema and target data models, we can build a pipeline in just a few steps. First, you stage the schema by selecting the fields we care about. Then we apply transformations – for example, turning a timestamp into a status.

We can then also validate the data to guarantee quality input. So every time the pipeline is running, you can easily spot and fix errors in your incoming data. We reconcile it if we are trying to join tables together to make sure everything links up correctly.

[1:06:22] Finally, we can promote it into your previously defined and uploaded custom target data model. Now, we are defining a new promotion activity. We can then create a new promotion item using a guided SQL statement user interface to select from a staging table what data we want to bring into our final data model.

Then, we select the schema that we want to use to feed this data into. And, later, we use the auto-map feature to quickly relate source and target fields to each other. Regardless the type of data, the same flow can be applied when creating pipelines. You can also leverage optional healthcare data set models and expand those.

[1:07:14] As you can see, you don’t need IT to prep the data or even disrupt existing systems. You can control when and how data flows in. Now that you have your pipeline set, you can bring your data in. Until then, you’re only working with the table schemas and catalog. You can run the pipeline manually, or you can also run on schedule. Now that your data is ready, you connect InterSystems Data Fabric Studio to any analytics tool of your choice – for example, Tableau. You can visualize the curated data that is coming from the pipeline that we just built and create your own dashboards.

[1:08:00] Let’s look at the MRI brain procedure, as this is a critical procedure. We see many completed within an hour, but just as many were canceled. But why? With Data Fabric Studio feeding curated data to Tableau, we can overlay imaging machine maintenance and analyze the repair for each machine and the staffing schedules. These data are also coming through the same pipelines.

What we find is clear. Procedures between 10 and 1 p.m. are being performed, but afterward, they are almost canceled. Why? The only staff certified to operate the MRI machine is in schedule beyond 1 p.m. With this insight, the fix is simple. Your organization must adjust the staffing schedule. And, the best part, as new data is produced, the pipeline automatically gets it for you. No need to re-upload data. You build once, you use many. You will easily see the impact of your actions reflected in your data in real time.

[1:09:07] Going back to Data Fabric Studio, another great module that can be added to it is the virtual assistant. Even before the data is persisted, the AI assistant helps users with proper governance to discover what’s available. It suggests useful tables, fields, and SQL expressions to get the data you want and plot the results in real visual outputs that make it easier to see.

For example, let’s try a question: Create a graph that shows the percentage of the procedures canceled by procedure type due to staff not being available. See – it’s there. Let’s recap what we did. First, we onboarded data from different systems using the HR tables as an example. Then, we created a pipeline following the stage – staging, transforming, validating, reconciling, and promoting to your custom target schema.

[1:10:11] We analyze your data using an external tool like Tableau, directly connected to InterSystems Data Fabric Studio. After that, we quickly review how the AI assistant can help you discover your data and get faster insights. As you can see, with Data Fabric Studio, you skip the delays. Business users easily access what they need directly, securely, and in real time – no more bottlenecks. And, additionally, you also have access to the virtual assistant module that can help you explore your data and get answers to complex questions. This is just a quick preview of our new private early access program.

[1:10:56] To dive deeper, don’t miss Judisma’s session on InterSystems Data Fabric with Health Module tomorrow at 2 p.m. Thank you very much, and see you in our sessions.

[1:11:09]

Gokhan: Thank you, Daniel, so much. It was great.

[1:11:16]

Gokhan: Well, such a great and exciting addition to our product portfolio and also to your toolbox as our customers.

Well, we can only highlight a few things on the keynote stage, but in the past 12 months, we have delivered a lot of other great capabilities for you.

Well, fully loaded release trains are coming to your station on time. And not only that, we have many, many release trains now – multiple release trains that are leaving the station from (InterSystems) IRIS Data Platform to Data Fabric Studio, our cloud-native data services, and also managed and professional services that we offer – all delivering great value to you.

[1:12:05] Well, in addition to all the new capabilities that we built, we did not lose sight of what are top priorities for you. Number one being trust, but number two – doing a lot more with less.

And, this graph that you're seeing is a courtesy of our great friends and partners at Epic Systems, where Epic has been able to clock more than 1.5 billion global references per second in a lab environment, and getting close to 200 million global references per second with a real customer in production.

This is amazing. This graph is a reflection of our dedication for making you ready for the future – today, tomorrow, and beyond.

[1:12:57] Well, in addition to that amazing throughput graph, Epic Systems in today's panel – Peter also said – they’re seeing up to 25% improvement in database response times year-over-year. This translates directly into better and faster experiences for our doctors and nurses, and better experiences on your .com websites. And, we've also done that by increasing the efficiency of the general platform in general.

Peter said 15 to 20% improvement in how much work we can do on a per-core basis. This means a lot less hardware, less frequent hardware upgrades, and better return on investment. This is also a highlight of our commitment to have you do a lot more with a lot less. Faster, cheaper, more scalable. And, this is not all. We have even more exciting pipeline – a great set of innovation that we're working on.

Tomorrow, we're going to have a session on data and AI platforms vision and roadmap, and we would love for you to see that. We'd love to see you there. And also a great set of EAPs that are ongoing. So, please do sign up for the EAPs and get a first-row seat into what our team is building. We would love to have your engagement, but also your feedback, so that we can build our joint future together.

[1:14:34] And, before I invite Scott back on the stage for some closing remarks, I want to personally say thank you again for imagining the things that we're not able to imagine, for building solutions that other people cannot build, and also making us first party in your personal journeys. Thank you so much again. Appreciate it.

[1:15:03]

Scott: Okay, so, the only thing between the break and you is me. So, I will be quick. There's one more thing. We talked a lot about different by design. We talked about new offers and all of the great things that we hope you can accomplish with (InterSystems) IRIS.

We also wanted to launch and announce at this event a reimagining of our partner program, and there'll be more information available on the partner portal. You can email Elizabeth Zaylor for more details.

But, for our SI partners, your success is our success. We're creating new training programs, new incentives, and new ways to work with us. And certainly, from the technology that you've seen today and the packaging around IDFS and some of the other cloud-based capabilities, we think there are now really unlimited opportunities for you to develop your IP and relationships and leverage IRIS inside.

[1:15:57] I'll leave you with this – ready? If you are here, you are. Thank you.