Choosing the Right Platform for Real-Time, Operational-Analytic, AI-Enabled Workloads

Modern data-driven enterprises demand platforms that deliver real-time insights, support AI and GenAI initiatives, and ensure governed, secure access to consistent, trusted data, without introducing more data silos.

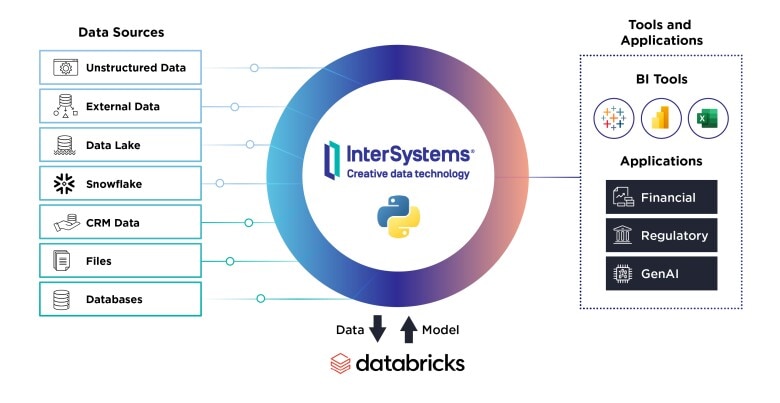

InterSystems IRIS® and InterSystems Data Studio™ are purpose-built to meet these needs, enabling organizations to integrate and harmonize data from diverse sources into a single, AI-enabled, real-time platform.

Databricks, by contrast, is an analytics and machine learning platform optimized for cloud-scale batch processing, data science, and model training in data lakehouse environments.

While these platforms serve different primary use cases, they can be highly complementary. InterSystems provides trusted, governed, real-time data for analytics and modeling in Databricks, while Databricks supports the development and maintenance of large-scale analytical models that can be deployed into real-time, connected operational workflows in InterSystems.

Comparison of Key Attributes

| Attribute | InterSystems | Databricks |
| --- | --- | --- |
| Primary Users | Application developers, integration engineers, data engineers, data stewards, analysts | Data engineers, data scientists, machine learning engineers |
| Primary Workloads | Optimized for real-time, high-performance operational-analytical (ACID-compliant HTAP/translytical) workloads | Optimized for batch processing and analytics; limited real-time support |
| AI-Ready Single Source of Truth | Data fabric architecture provides dynamic, consistent, real-time access across disparate sources, creating an AI-ready single source of truth without requiring data duplication | Optimized for batch analytics workloads rather than serving as an AI-ready, real-time single source of truth |
| Deployment Flexibility | Supports on-premises, public cloud, private cloud, and hybrid deployments | Cloud-only (Azure, AWS, GCP) |
| Lakehouse Support | Supports high-performance real-time and batch lakehouse use cases | Pioneered the lakehouse architecture; optimized for analytical rather than operational workloads |
| Multi-model Support | Supports a wide variety of data types and models, including relational, key-value, document, text, object, and array, without duplication or mapping | Optimized for data transformed into Delta Lake format |
| Low-Code / No-Code Interfaces | Unified UI with built-in low-code and no-code tools | Primarily code-first; Spark expertise and scripting typically required; minimal low-code support |
| Performance at Scale | Proven extremely high performance at scale in mission-critical, highly regulated industries (e.g., healthcare, finance, government) for operational, analytical, and real-time operational-analytical workloads | High performance with Photon for analytical workloads; not optimized for low-latency use cases; performance problems at scale with Neon DB |
| Operational Simplicity | Integrated platform and services simplify deployment, configuration, and management | Requires manual setup of clusters, jobs, and orchestration pipelines |
| Security & Governance | Built-in governance and security across virtual and persistent data; industry-specific capabilities for healthcare, government, and financial services | Built-in governance via Unity Catalog; complex setup required |
| Real-Time Streaming / Ingestion | Native support for real-time sources; extremely low latency for ingestion and for concurrent analytics on real-time data | Structured Streaming and Auto Loader available; high latency for analytics processing on real-time data |
| Model Deployment / MLOps | Operationalizes models from any framework, including Databricks, directly in live, connected workflows | End-to-end ML lifecycle support via integration with open-source MLflow |

Complementary, Not Competitive

InterSystems and Databricks

Together, InterSystems and Databricks enable a complete pipeline from raw data to real-time, AI-driven decisions and actions.